A Guide to Using a Website Data Scraper

At its heart, a website data scraper is a tool built to automatically pull huge amounts of information from websites. The best way to think of it is like having a personal research assistant who can scan thousands of web pages in an instant, plucking out just the facts you need and organizing them into something clean and useful, like a spreadsheet.

What Is a Website Data Scraper and How Does It Work?

So, how does this actually happen? Let's break it down.

A website data scraper solves a very common problem: the internet is an enormous, chaotic library. Manually copying and pasting data from it is not just slow and boring—it’s a recipe for mistakes. A scraper automates that entire chore, transforming messy web content into structured data that’s ready for you to analyze.

Imagine you need to gather the prices of every single smartphone on an e-commerce giant's website. By hand, that’s a soul-crushing task that could take you all day. A scraper, on the other hand, can knock out the same job in minutes by following a simple, yet powerful, three-step process.

The Core Scraping Process

First, the scraper sends a request to the website's server, just like your browser does when you visit a URL. The server then sends back the site’s raw code, which is usually HyperText Markup Language (HTML). This is the blueprint of the page, containing all the text, links, and images you see, but it’s formatted for a browser to read, not a person.

Next up is the most crucial step: parsing. The scraper intelligently combs through all that HTML code to find and isolate the exact pieces of information you told it to look for. It's like telling your research assistant, "Go through these 100 pages and only bring me back the prices. Ignore everything else."

The Power of Parsing: This is where the real magic is. A well-designed scraper can tell the difference between a product title, its price, a customer rating, and a block of marketing copy—even when they're all tangled together in the code.

Finally, the scraper takes all the data it has found and neatly organizes it into a structured format. Instead of a messy blob of code, you get a clean, orderly file like a CSV or an Excel spreadsheet, with everything sorted into columns like "Product Name," "Price," and "Rating."

Why Scraping Is So Valuable

It's no surprise that the demand for these tools is exploding. Businesses are waking up to the power of making decisions based on real-time data. The global web scraping market is growing fast, driven by the need for up-to-the-minute information in competitive fields like e-commerce, finance, and marketing.

While some 2023 estimates valued the market around $363 million, the rapid push for data-driven strategies has blown past those figures. Current projections now suggest the market will soar to roughly $2.83 billion by 2025, with a compound annual growth rate (CAGR) of 14.4% through 2033. This growth shows just how much companies now rely on scrapers for everything from competitive analysis and price monitoring to lead generation. You can read more about the web scraping market growth to see just how fast it's moving.

What’s fueling this growth? Accessibility. You no longer need to be a developer to get in on the action. Modern tools, even simple browser add-ons, make it easy for anyone to start collecting data. With a no-code solution, you can be up and running in just a few minutes.

Ready to see how simple it can be? You can start your first project right now without writing a single line of code by downloading our Ultimate Web Scraper Chrome extension.

What Can You Actually Do With Data Scraping?

It's one thing to understand the what of data scraping, but the real magic happens when you see the how. The true value of scraping isn't in the technology itself, but in how it’s applied to solve real business problems and create a serious competitive advantage.

From hungry startups to established enterprises, companies in every imaginable sector are putting scraped data to work. They're using it to fine-tune their strategies, streamline operations, and uncover opportunities that would otherwise stay hidden. These aren't just abstract theories; they're practical, everyday applications delivering real results.

Fueling Growth in E-commerce and Retail

If there's one industry that lives and breathes data scraping, it's e-commerce. In a market where products, prices, and promotions can change in the blink of an eye, trying to keep up manually is a fool's errand. This is where a website scraper becomes an indispensable part of the team.

Think about an online store that sells electronics. They could set up a scraper to automatically check their competitors' websites several times a day. This firehose of fresh data lets them:

- Master Dynamic Pricing: They can automatically adjust their own prices to stay competitive—maximizing margins on some products while being the best deal on others.

- Track Product Catalogs: They get an inside look at what competitors are launching, what they're discontinuing, and which products are flying off the virtual shelves.

- Monitor Inventory: When a competitor sells out of a hot item, they get an instant alert, opening a window to capture those eager buyers.

This constant flow of intelligence allows a business to react to the market in real-time, not weeks later. With over 3 billion people buying things online by 2023, the demand for this kind of automated insight has exploded. It’s no surprise the web scraping software market is projected to reach $782.5 million in 2025 and is on a path to soar past $3.5 billion by 2037, all driven by the relentless need for e-commerce data. You can start automating these tasks today by downloading our Chrome extension: download our Ultimate Web Scraper Chrome extension.

Revolutionizing Real Estate and Finance

It’s not just about online shopping, either. Data scraping has completely changed the game in industries like real estate, which once ran on slow, disconnected information.

A real estate agency can use a scraper to pull property listings from dozens of regional sites, Zillow, and other platforms into one clean, unified database. Suddenly, their agents have the most current information on new listings, price drops, and recent sales, all in a single dashboard.

The Big Picture: Data scraping brings order to chaos. Instead of an agent juggling 20 different websites for property data, they can check one central source. This saves countless hours and helps them serve clients better and faster.

Over in the world of finance, analysts scrape news outlets, social media feeds, and official reports to get a read on market sentiment. By tracking how often positive or negative words appear in relation to a specific stock, they can build sophisticated models to anticipate market shifts.

Supercharging Marketing and Lead Generation

For sales and marketing teams, a good data scraper is like a lead-generation superpower. The old way of manually hunting for prospects is painfully slow and often yields poor results.

One of the most effective uses is building automated lead generation strategies to gather prospect info without the manual grind. For example, a B2B software firm could scrape professional networking sites or industry directories to instantly build a highly targeted list of potential clients who perfectly match their ideal customer profile.

Likewise, marketers can scrape customer reviews from sites like Amazon or Trustpilot. By analyzing this feedback at scale, they can quickly pinpoint what customers love about their products and—just as importantly—what needs fixing. It's an incredible source of direct, honest feedback for improving both products and marketing messages. You can simplify this entire process by downloading our Ultimate Web Scraper Chrome extension.

How to Choose the Right Website Data Scraper

Picking the right tool for a web scraping job can feel overwhelming. You’ve got everything from simple browser add-ons to powerful, enterprise-grade platforms. So, where do you even start?

The truth is, it's not as complicated as it seems. The perfect choice really boils down to three things: your project's goals, how comfortable you are with code, and your budget. By thinking through these factors, you can zero in on a scraper that fits what you need, whether you're spying on competitors, tracking prices, or building a list of sales leads.

Assess Your Technical Expertise

First, let's be honest about your tech skills. Are you a developer who lives and breathes code, or does the idea of writing a script sound like a nightmare? This is the most critical question because it immediately splits the world of scraping tools in two.

- For the Non-Coder: If you're in marketing, research, or you're a business owner who just needs data, you'll want to look for no-code or visual scrapers. These are usually browser extensions or desktop apps with a point-and-click interface. You literally just click on the data you want to grab from a webpage. It's that simple.

- For the Developer: If you’re comfortable with programming, you can build your own scrapers using libraries like Beautiful Soup for Python. This approach gives you total control and flexibility, but it also means you're on the hook for building, running, and fixing everything yourself.

For most people, a powerful no-code tool hits that sweet spot between ease of use and getting the job done right. To get started without any coding, you can download our Ultimate Web Scraper Chrome extension.

Define the Scale and Complexity of Your Project

Next up, think about the job itself. Are you just trying to pull a few product names off a single page? Or do you need to extract thousands of records every day from a complex website that's constantly changing?

The size of your task is a huge factor. The global web scraping software market was valued at $718.9 million in 2024 and is expected to hit $2.2 billion by 2033. That explosive growth is happening for a reason—businesses have vastly different needs, from small one-off jobs to massive, ongoing data pipelines.

Key Takeaway: A simple browser extension is perfect for a quick, one-time data pull. But if you need to scrape hundreds of pages on a set schedule, you’ll need a more robust tool built for automation and scale.

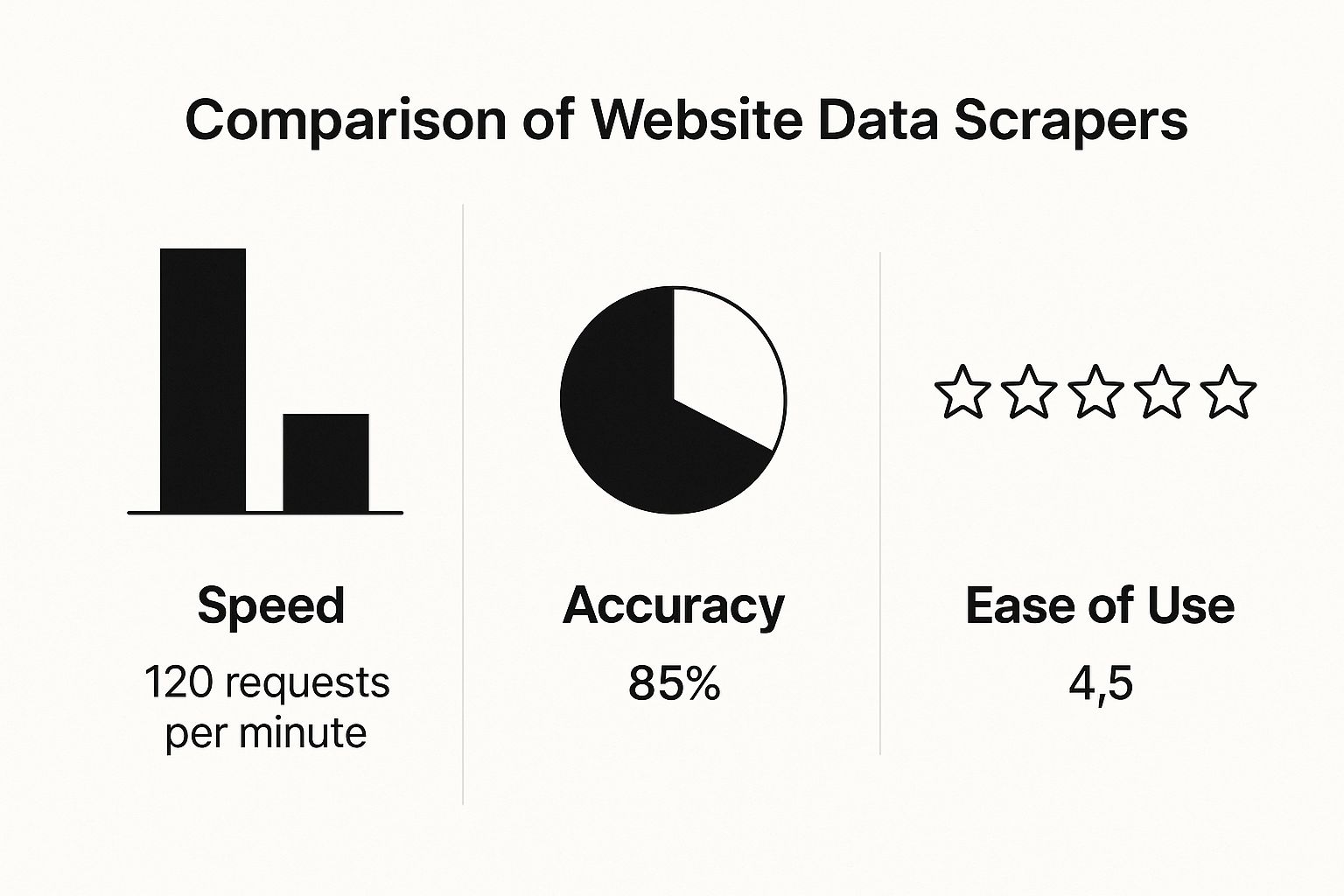

This chart gives you a quick visual on how different types of scrapers stack up against each other.

As you can see, there's often a trade-off. The easiest tools might not be the fastest for huge jobs, while the most powerful ones can take a bit more time to master.

Comparing Your Main Options

Once you know your technical comfort level and the size of your project, you can start looking at the main types of scraping tools. To help you sort through them, we’ve put together a quick comparison table.

Comparison of Website Data Scraper Types

This table compares the key features, pros, and cons of different types of web scraping tools to help you choose the best fit for your project.

| Scraper Type | Best For | Technical Skill | Cost | Scalability |

|---|---|---|---|---|

| Browser Extension | Small, quick tasks; beginners | Very Low (No-code) | Free to Low | Low |

| Desktop Application | Medium-sized projects; scheduled scrapes | Low to Medium | Low to Medium | Medium |

| Cloud-Based Platform/API | Large-scale, recurring jobs; enterprises | Medium to High | Medium to High | High |

This breakdown should give you a clear idea of where to start looking. For a more detailed analysis, you can also check out our complete guide to the best web scraping tools out there. Let's dig a little deeper into each option.

- Browser Extensions: These are lightweight tools that live right in your Chrome or Firefox browser. They are incredibly easy to use, making them a fantastic starting point for anyone new to scraping.

- Desktop Applications: Think of these as a step up from extensions. You install them on your computer, and they offer more horsepower for bigger projects and often include handy features for scheduling scrapes.

- Cloud-Based Platforms & APIs: This is the big league. These services run the scrapers on their own powerful servers, so you can tackle massive projects without bogging down your own machine. They’re built for reliability and scale, handling things like IP rotation and CAPTCHAs for you.

For most people just dipping their toes into data scraping, a browser extension is the way to go. It’s a hands-on, low-risk way to learn the ropes and get valuable data almost instantly. To get started now, download our Ultimate Web Scraper Chrome extension.

Your First Web Scraping Project Step-by-Step

Theory is great, but the best way to really understand how a website data scraper works is to get your hands dirty. Let's walk through your first project together. This guide is built for complete beginners, and we'll pull real data from a website without writing a single line of code.

The image above shows what a simple, no-code scraper looks like in action. Forget complex programming; these tools have made data extraction as easy as pointing and clicking. This really opens the door for anyone who needs web data, not just developers.

Want to follow along? You can get set up in less than a minute. Just download the Ultimate Web Scraper Chrome extension. You’ll be ready to scrape your first dataset before you even finish this guide.

Step 1: Identify the Data You Need

Every good scraping project starts with a clear goal. What information are you actually after?

For this walkthrough, let's put on the hat of an e-commerce analyst. Our mission is simple: scrape all the product names and their prices from a demo online store. The idea is to gather some quick competitive intelligence.

Having a focused objective like this is key. It stops you from getting overwhelmed and collecting a bunch of data you don't need. So, we're targeting just two things: product names and prices.

Step 2: Target the Elements with a Visual Selector

Now that we know what we want, we need to show the scraper where to find it. This is where no-code tools are brilliant.

Instead of digging through a site’s HTML, you just point to the data you want. Here’s how it works:

- Go to the e-commerce product page you want to scrape.

- Open the Ultimate Web Scraper extension from your browser toolbar.

- Click on the name of the first product. You’ll see the tool intelligently highlight all the other product names on the page. It instantly recognizes the pattern.

- Do the same for the prices. Click on the first product's price, and the tool will automatically select all the other prices.

In just a couple of clicks, you’ve trained the scraper. It now knows the exact pattern to follow for every item on the page.

The Point-and-Click Advantage: This visual selection is what makes modern scraping so accessible. You don't need to know what a CSS selector is or how to read HTML. If you can see it and point to it, you can scrape it.

Step 3: Run the Extraction and Export

With our data points selected, we’re ready for the magic. Just hit the "Scrape" button inside the extension. The website data scraper will immediately get to work, pulling all the highlighted information into a neat table right in front of you.

You can quickly glance at the preview to make sure everything looks right. The final step is getting that data out. Most tools, including this one, offer a few formats:

- CSV: The go-to format for anyone using Google Sheets or Microsoft Excel.

- JSON: A favorite for developers who need to plug this data into another app.

- Copy to Clipboard: Perfect for a quick paste into a report or document.

And that's it! You've successfully turned a chaotic webpage into a structured spreadsheet in under five minutes. This simple workflow is the foundation of most no-code web scraping projects and proves just how much you can accomplish with these powerful tools.

Scraping Data Legally and Ethically

A website data scraper is an incredibly powerful tool, but with great power comes great responsibility. Think of it like this: you've been handed the keys to a library, allowing you to access vast amounts of information. But there are rules, both written and unwritten, that you need to follow.

This isn't just about avoiding trouble. It's about being a good digital citizen and ensuring the web remains an open, useful resource for everyone. Let’s walk through the key things you need to know to scrape data confidently and, most importantly, respectfully.

Always Read the Terms of Service

Your first stop should always be a website's Terms of Service (ToS) or "Terms of Use." This document is the legal handshake between a site and its users, and it often lays out clear rules about automated access and data gathering.

Frankly, many sites flat-out prohibit scraping in their ToS. While the legal muscle behind these terms can sometimes be a gray area, ignoring them is the fastest way to get your IP address blocked. If a site explicitly says "no," your best bet is to respect their wishes and find another data source.

Understand the Robots.txt File

Picture the robots.txt file as a website's welcome mat. It's a simple text file that site owners place on their server (you can usually find it at website.com/robots.txt) to give instructions to automated bots—from Google’s crawlers to your data scraper.

This file spells out which parts of the site are okay for bots to visit and which areas are off-limits. For instance, a site might use its robots.txt to keep bots out of user profile pages or internal search functions.

The Golden Rule of Robots.txt: This file isn't legally binding, but respecting its rules is a universal sign of good faith in the scraping world. Ignoring it is like waltzing past a "Staff Only" sign—it's just bad form and will likely lead to you being shown the door.

Checking this file should be step one for any scraping project. It shows you respect the site owner's boundaries and saves you from wasting time trying to access pages you shouldn't.

Core Principles for Ethical Scraping

Beyond the formal rules, ethical scraping is really about being considerate. Websites run on servers with limited resources. An aggressive scraper hitting a site with a flood of requests can slow it down for everyone or even cause it to crash.

To make sure you're part of the solution, not the problem, just follow a few common-sense practices:

- Scrape at a Polite Pace: Don't hammer a server with hundreds of requests a second. It's not a race. Introduce delays between your requests (even just 1-2 seconds) to mimic how a human would browse the site. This dramatically reduces the strain on their server.

- Identify Your Bot: When you can, set a descriptive User-Agent in your scraper's requests. This tells the website admin who you are and what you're doing. A little transparency goes a long way.

- Scrape During Off-Peak Hours: If you have a massive scraping job planned, try running it late at night or whenever the site has the least traffic. This minimizes your impact on actual human visitors.

- Never Scrape Private Data: This is the big one. Never, ever try to collect personally identifiable information (PII) like names, emails, or phone numbers, especially anything behind a login screen. Stick exclusively to information that is already public.

When you adopt these habits, you're acting less like a brute-force bot and more like a respectful researcher. This keeps you in the clear and makes it less likely that websites will have to put up heavy-duty anti-scraping walls. For example, scraping public business information is a very common and generally accepted practice. If you want a real-world example of these principles in action, our guide on how to scrape Google Maps legally and ethically breaks it down step-by-step.

In the end, scraping ethically is about sustainability. By gathering data the right way, you help keep the web an open and valuable resource for years to come. For simple, respectful scraping of public data, a well-designed browser tool can be your best ally. Download our Ultimate Web Scraper Chrome extension to get started with a tool built for efficient and considerate data collection.

How to Overcome Common Scraping Obstacles

Even with the best website data scraper, getting clean data isn't always a walk in the park. Sooner or later, you'll run into a website that seems to put up a fight. Knowing why this happens and how to work around it is the key to turning a frustrating project into a successful one.

Most of these hurdles fall into a few common buckets. Let's break down what you'll likely face and talk about practical ways to get past them, so potential roadblocks become nothing more than minor speed bumps.

Handling Dynamic JavaScript Content

Have you ever loaded a webpage, only to watch the most important information appear a second or two later? That's usually JavaScript at work, loading content after the initial page structure is in place. A basic scraper that just grabs the first batch of HTML will completely miss this data—it shows up, gets the code, and leaves before the real content even arrives.

You'll see this all the time on modern, interactive sites. Think of social media feeds that load more posts as you scroll or product pages with infinite scrolling.

The solution? Use a scraper smart enough to wait for the page to finish rendering, just like a person would. Many advanced scraping tools and browser extensions, including ours, include "wait" or "delay" functions. These settings tell the scraper to pause for a few seconds, giving all the JavaScript elements time to load onto the page before the extraction begins. Download our Ultimate Web Scraper Chrome extension to handle dynamic content with ease.

Navigating Through Multiple Pages

Another classic challenge is pagination. This is simply when data, like a long list of products or search results, is split across several pages. Having to scrape each page one by one would completely defeat the purpose of automation.

A good website data scraper can handle this no problem. The best tools have features built specifically for navigating from one page to the next.

- "Next" Button Clicks: You can teach the scraper to find and "click" the "Next" button on each page, repeating the process until it runs out of pages.

- URL Pattern Recognition: Often, page URLs follow a predictable pattern, like

site.com/products?page=1, thenpage=2, and so on. A smart scraper can be set up to automatically cycle through these numbered URLs.

For most people, a powerful but simple no-code tool can manage pagination with just a few clicks. For instance, the Ultimate Web Scraper Chrome extension is built to handle these multi-page jobs with an easy point-and-click setup.

Dealing with Anti-Scraping Measures

Some websites go a step further and actively try to block automated traffic to protect their data or keep their servers from getting overwhelmed. If you’ve ever had to check a CAPTCHA box to prove you're not a robot, you've encountered an anti-scraping measure firsthand.

Why Sites Use Blockers: Websites use these techniques to prevent aggressive bots from overloading their servers, to protect copyrighted content, and to stop competitors from scraping sensitive information like pricing.

These defenses can be as simple as blocking an IP address that makes too many requests or as complex as analyzing browsing behavior to detect non-human patterns. While getting around the toughest systems can be a real cat-and-mouse game, here are a few common strategies to stay under the radar:

- Use Proxies: A proxy sends your scraper's requests through different IP addresses. To the website, it looks like the traffic is coming from many different users, which makes it much harder to single out and block your scraper.

- Slow Down Your Requests: As we covered in the ethics section, hammering a server with rapid-fire requests is a huge red flag. Always build delays between your requests to mimic how a human would actually browse the site.

- Rotate User-Agents: A User-Agent is a bit of text that tells a website which browser you're using. By switching between different User-Agents (like Chrome, Firefox, and Safari), your scraper will look less like a single, repetitive bot and more like different organic visitors.

Got Questions About Data Scraping? We’ve Got Answers.

Jumping into data scraping for the first time usually brings up a handful of questions. It's completely normal. To help you get started on the right foot, we've put together answers to the things people ask us most often. Think of this as your quick-start guide to clear up any confusion.

Is It Legal to Use a Website Data Scraper?

This is almost always the first question, and it's a good one. The short answer is: yes, scraping publicly available information is generally legal. But the devil is in the details. The key is to be a good internet citizen and respect the website's rules.

Before you start, always check the site’s Terms of Service and see what its robots.txt file says. A golden rule to live by is to never scrape copyrighted material or private personal data. If you're planning a large-scale project or the data feels sensitive, it's always smart to talk to a legal expert first.

Can I Scrape Data from Literally Any Website?

Technically, you can try to scrape any website, but you won't always succeed. Modern websites are getting smarter. Many are built with complex JavaScript or have anti-bot systems specifically designed to stop scrapers in their tracks, which can make things tricky.

For most common tasks, like gathering product prices or customer reviews, a good, user-friendly tool will work on a huge number of sites. But for the really tough, heavily guarded websites, you might need a more advanced tool with features like proxy rotation to get the job done.

The Big Shift: The best part? You no longer need to be a developer to do this. Powerful no-code tools have opened up web scraping to everyone, making it possible for anyone to gather public data without writing a single line of code.

Do I Really Need to Know How to Code?

Nope, not anymore. While developers can (and do) build powerful custom scrapers with languages like Python, that's no longer the only way. The game has completely changed, and today, anyone can use a website data scraper thanks to intuitive, no-code solutions.

Visual tools and browser extensions are built for simplicity. Our own Ultimate Web Scraper, for instance, lets you just point your mouse and click on the data you want. It's that easy.

What’s the Best Format for Scraped Data?

The best format really comes down to how you plan to use the data. For most people, it boils down to two excellent choices: CSV and JSON.

- CSV (Comma-Separated Values): This is your go-to for analysis. You can open a CSV file directly in spreadsheet programs like Microsoft Excel or Google Sheets. It’s perfect for when you want to sort, filter, or create charts from your data.

- JSON (JavaScript Object Notation): This one is a favorite among developers. It's a clean, structured format that’s ideal for feeding scraped data into another application, a database, or a custom program for more advanced uses.

Published on